I had the pleasure this week of traveling to sunny Miami to attend EMNLP 2024 and present a paper that I published with the R&D team at Babel Street, a Nodora Partners client, at the Future of Event Detection workshop. The type of paper we published is one of my favorites: one where a simple, small tweak to an existing system gives it a second wind.

This research focused on a task in natural language processing called event extraction. Suppose you have a piece of text which says, “John called Sally last Tuesday.” An event extraction system might be able to take that text and produce a structured object like the following:

    {
        "event_type": "CALL",
        "caller": "John",
        "callee": "Sally",
        "time": "last Tuesday"
    }

Now, this toy example is not terribly interesting, but one can imagine the applications for things like compliance screening if such an algorithm could be run on an entire news article (“stole money” could be an interesting event type to extract).

This task is receiving more and more attention in the NLP community (hence this new workshop at EMNLP), and it is particularly challenging for the simple reason that identifying events often requires navigating a lot of ambiguity and nuance (which translates to a lot of training data). For a more comprehensive overview of this topic, check out the talk I gave at HLTCon back in 2021.

Now, in 2024, the field has progressed quite a bit, and we are able to use neural networks for this task thanks to the ability of LLMs to generalize much more than older language models could. While there are a few neural approaches to this problem, the one we focus on is known as DEGREE.

DEGREE’s insight was to formulate the structure shown above in a natural language format. Suppose we have the sentence “John met with Alice,” and we would like to check for the presence of a meeting event. We would then give the DEGREE model the following input (see footnote 1):

    John met with Alice.
    contact event, meet sub-type
    The event is related people meeting.
    Similar triggers such as meet, met.
    The event’s trigger word is <Trigger>.
    some people met at somewhere.

Then, the DEGREE model would reply with the following output, which could then be parsed into a structure like the one above:

    Event trigger is met.
    John and Alice met somewhere.

Why is this a clever approach? It takes advantage of the fact that LLMs understand natural language well, so it is more natural for them to do the information extraction implicitly by replying in natural language. DEGREE was state-of-the-art upon publication, but it was later dethroned by two other models.
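To make that round trip concrete, here is a minimal Python sketch of how such a prompt could be assembled and how the reply could be parsed back into a structured object. The names `build_prompt` and `parse_reply`, the regular expressions, and the field names `participants` and `place` are assumptions made for illustration. They are not taken from the DEGREE codebase, and the parsing only handles the single "meet" template from the example.

```python
import re

# Template pieces for the "meet" event, copied from the example prompt.
# The dictionary layout itself is a hypothetical convenience.
MEET_TEMPLATE = {
    "type_line": "contact event, meet sub-type",
    "description": "The event is related people meeting.",
    "keywords": "Similar triggers such as meet, met.",
    "trigger_template": "The event’s trigger word is <Trigger>.",
    "argument_template": "some people met at somewhere.",
}

TRIGGER_RE = re.compile(r"^Event trigger is (?P<trigger>.+?)\.$")
# Accept both "met somewhere" and "met at somewhere".
ARGS_RE = re.compile(r"^(?P<participants>.+?) met (?:at )?(?P<place>.+?)\.$")
# Split "A and B" as well as "A, B, and C".
NAME_SPLIT_RE = re.compile(r"\s*,\s*(?:and\s+)?|\s+and\s+")


def build_prompt(passage: str, template: dict) -> str:
    """Put the passage first, then each template piece on its own line."""
    return "\n".join([passage, *template.values()])


def parse_reply(reply: str) -> dict:
    """Turn a two-line reply into a structured event (meet template only)."""
    lines = [line.strip() for line in reply.splitlines() if line.strip()]
    if len(lines) != 2:
        raise ValueError(f"expected two lines, got {len(lines)}")
    trigger_match = TRIGGER_RE.match(lines[0])
    args_match = ARGS_RE.match(lines[1])
    if trigger_match is None or args_match is None:
        raise ValueError("reply does not follow the meet template")

    who = args_match["participants"]
    place = args_match["place"]
    return {
        "event_type": "MEET",
        "trigger": trigger_match["trigger"],
        # Placeholders left unfilled by the model mean "argument not found".
        "participants": [] if who == "some people" else NAME_SPLIT_RE.split(who),
        "place": None if place == "somewhere" else place,
    }


if __name__ == "__main__":
    print(build_prompt("John met with Alice.", MEET_TEMPLATE))
    print(parse_reply("Event trigger is met.\nJohn and Alice met somewhere."))
```

Note how the unfilled placeholder "somewhere" maps to a missing argument: the template doubles as both the question and the schema.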

Our insight was that DEGREE was being unfairly penalized compared to these new models. Imagine an input sentence like the following:

    John met with Alice and then with Susan.

The problem here is that there are two events in this sentence: one meeting between John and Alice and another between John and Susan. Unfortunately, DEGREE is only able to extract one of these, by design. Even worse, for performance reasons, DEGREE breaks apart input documents into groups of three sentences. That means that for every three-sentence chunk of the document, at most one event can be extracted!
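The ceiling this imposes is easy to quantify. The sketch below takes the one-event-per-chunk limit at face value and assumes the document has already been split into sentences. The helper names `chunk_sentences` and `max_extractable_events` are hypothetical and only serve to show the arithmetic.

```python
def chunk_sentences(sentences: list[str], size: int = 3) -> list[list[str]]:
    """Group consecutive sentences into chunks of at most `size` sentences."""
    return [sentences[i:i + size] for i in range(0, len(sentences), size)]


def max_extractable_events(sentences: list[str], size: int = 3,
                           events_per_chunk: int = 1) -> int:
    """Upper bound on extracted events when each chunk yields a fixed maximum."""
    return len(chunk_sentences(sentences, size)) * events_per_chunk


if __name__ == "__main__":
    document = [f"Sentence {n}." for n in range(1, 8)]  # seven sentences
    print(len(chunk_sentences(document)))    # number of three-sentence chunks
    print(max_extractable_events(document))  # cap on events for the document
```

A seven-sentence document yields three chunks, so no more than three events can come out of it, no matter how many the text actually describes.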

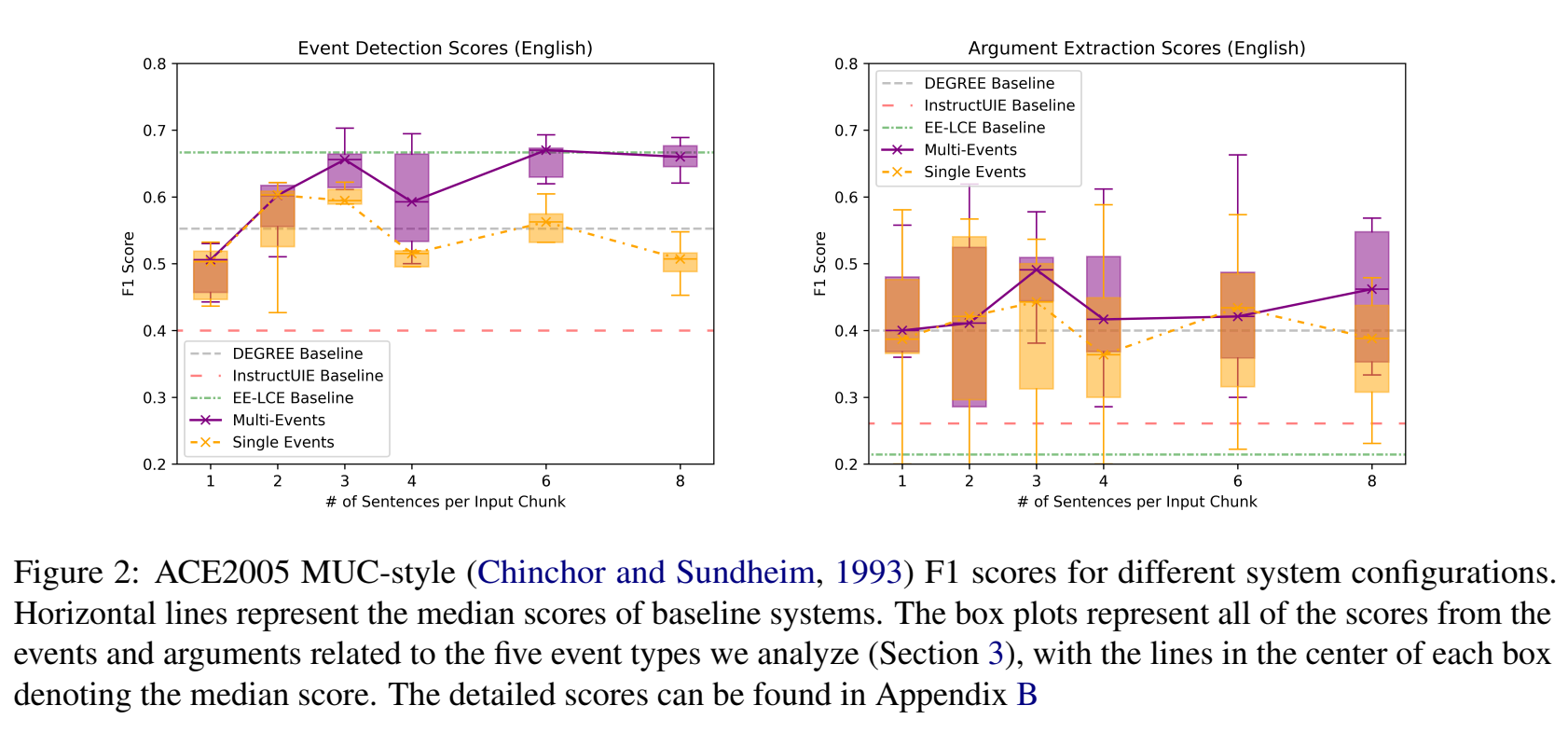

Our solution: modify the training algorithm for DEGREE such that it can produce multiple events. Not only did this work, but we were able to scale up well past three sentences (further improving performance) without a drop in quality due to this new capability.
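This post does not spell out the new output format, so the following is purely an illustrative assumption rather than the method from the paper. One way a generative extractor's target could carry several events is to repeat the filled template once per event, joined by a separator. The `<sep>` token and the function names `serialize_events` and `split_events` are hypothetical.

```python
SEP = "<sep>"  # hypothetical separator token, not taken from the paper


def serialize_events(events: list[dict]) -> str:
    """Write one filled 'meet' template per event, joined by the separator."""
    filled = [
        f"Event trigger is {event['trigger']}. "
        f"{' and '.join(event['participants'])} met somewhere."
        for event in events
    ]
    return f" {SEP} ".join(filled)


def split_events(target: str) -> list[str]:
    """Recover the per-event strings from a multi-event target."""
    return [part.strip() for part in target.split(SEP) if part.strip()]


if __name__ == "__main__":
    events = [
        {"trigger": "met", "participants": ["John", "Alice"]},
        {"trigger": "met", "participants": ["John", "Susan"]},
    ]
    target = serialize_events(events)
    print(target)
    print(split_events(target))
```

With a target like this, the sentence "John met with Alice and then with Susan." can be paired with two events during training instead of one.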

Curious to learn more? Read the full paper here!

Footnotes

1. For an overview of terms like “triggers” and “arguments”, please watch the above-linked HLTCon talk.